Floor Vanden Berghe

“Can we play language games with computers?”

Floor Vanden Berghe is a fine arts student. Drawing on his interests in game theory, epistemology and artificial intelligence, among other fields, he has developed a practice that subversively pits knowledge and algorithms against each other. Below is an excerpt from his thesis, in which he reflects on, and expresses concerns about, the relationship between humans and machines.

For an organism trying to survive within a complex system, insights that identify causal relationships are very valuable. Game theory describes how to generate, computationally, the insights that can lead to a favorable outcome within an axiomatic system. Artificially intelligent systems running on computers can generate these insights through brute-force computation. As humans, we can create simplified models of the world and feed them as initial propositions to such systems, which in turn infer how to move efficiently toward a goal within those models. However, the models fed to algorithmic, “artificially intelligent” systems are incomplete. They form a frame: some parts of reality, sometimes deliberately chosen, fall inside it and others fall outside, and what falls inside forms a desirable composition.

In contrast, the axioms of our own human context are unknown. Science, which investigates the initial propositions of our reality, seems to be an endless “work in progress.” So far, artificial intelligence seems useful only within well-defined subdomains; unlike humans, it seems unable to keep an overview while wading through several contradictory axiomatic domains. The same seems true of science, which has not yet provided a comprehensive explanation and instead keeps delineating new subdomains. Place such an artificially intelligent system in unknown territory and it will be anything but efficient; science, for its part, will divide that territory up. Absolute logic seems convenient for survival within delimited domains, and for denying the unknown. But what if we find ourselves in new, uncharted territory where the boundaries of the playgrounds we use turn out to be meaningless?

Why do humans thrive in such a chaotic environment, while machines so far thrive only in controlled ones? Culture is a world-shaping force that helps humans survive by passing on compressed insights from the past. It seems to evolve organically alongside civilization and to consist of ideas or insights passed on in the form of objects or information sequences. Culture seems to evolve because those ideas are passed on with mutations. Language seems fundamental to the transmission of these information sequences, on which culture relies. Objects that embody such compressed insights are often interpreted linguistically, as analogies. This interpretation, or decompression, of the idea is ambiguous: it depends on the linguistic context in which a subject makes it. The mutations, or noise, in the transmission of ideas seem to be a function of a complex, subjective, perspective-bound linguistic context. These modulations of ideas therefore appear neither random nor governed by the computational brute-force methods we recognize from game theory. “Random” seems to me a word often used as a synonym for “unintelligible, incalculably complex” or “incompressible chaos.” Wherever “random” is replaced by “computable,” the result becomes a logical machine system.

There seems to be an inherent difference between human language and computer language. Human language is composed of words and is non-hierarchical and analog. In the absence of formalized encoding, and with navigation through language being so confusing, noiseless transmission of information seems impossible. The analog nature of human language seems to allow for an infinite continuity of information. Within that continuity, one can point in a direction but never absolutely define what is being pointed to (the Ding an sich). This, in turn, allows for paradoxes, and it is this analog, infinite nature, I believe, that makes analogy valuable as a method. The method of analogy, naming something by means of something with a similar structure but a certain variation, seems to reflect what we are as human beings: similar, hard-to-pin-down beings with a certain variation in form.

In machines, language seems to be made up of bits, shuttled back and forth within formally established hierarchical systems. In such a digital system, noiseless identical copies are possible. Incidentally, this formal, machine way of processing information seems to reflect what those machines are: atomically constructed mechanisms that must be defined and predictable. This formal, hierarchical machine language seems incapable of communicating within analog, continuous systems, in which a point one points to can never be described absolutely.

Science, absolute logic and code seem to delineate fields and to be very efficient within them. Culture as we know it today, with which we have evolved side by side, thrives on analog human communication: dialogue, which mutates ideas according to a subjective linguistic context. The digital computer age, together with an educational preference for STEM subjects that arose from a geopolitical preference for technological efficiency, seems to treat absolute logic and noiselessness as the desirable form of communication.

Human language, with its contradictions and analogies, seems fundamental to culture as a mechanism for survival in uncharted territory. Abandoning this mode of communication, with its contradictions and metaphors, seems to imply that our terrain is known and will remain so, which strikes me as both vain and depressing. At the same time, the social emphasis on formal, logical, absolute communication seems to be a conservative political choice. It seeks to operate within a system whose starting points are claimed to be known, a system in which the human being is a cog or an interchangeable atom in a predictable machine.

In human communication, information seems to be colored by the subject's overall context. This context is not a single, selective, circumscribed playground but an all-encompassing one. It includes the various axiomatic systems in which the subject engages, as well as a speculative interpretation of the unknown surrounding them. This coloring is what gives value to the information a subject offers within a system of interdependence: it is a context-based modulation of an idea, shared so that it can be selected if it proves a valuable insight for navigating both known and unknown territory. At the same time, this subjective modulation of ideas, insights and interpretations is needed to map the unknown and to choose in which direction to explore it.

Ideas, in the form of information sequences, determine human actions. Technological advances make those actions very potent at changing the environment. The environment is changing at an exponentially accelerating rate, and many organisms, dependent on slow genetic evolution, seem to be going under. It seems that technology and culture dug this hole for us and must, at the same time, fill it. As humanity, we seem like a junkie on a self-destructive path. An increasing share of communication happens digitally, which suppresses noise in information sequences and promotes a formalism that seems to hinder the evolution of culture and diminish the value of the subject. Culture as a control system seems less able to evolve through artificially intelligent digital communication channels, while the environment is changing at an ever-increasing pace. More than ever, it seems important to be conscious and nuanced about which ghosts we believe in, which ghosts we talk about, and which ghosts we allow to reproduce, and how.

Projects by Floor Vanden Berghe

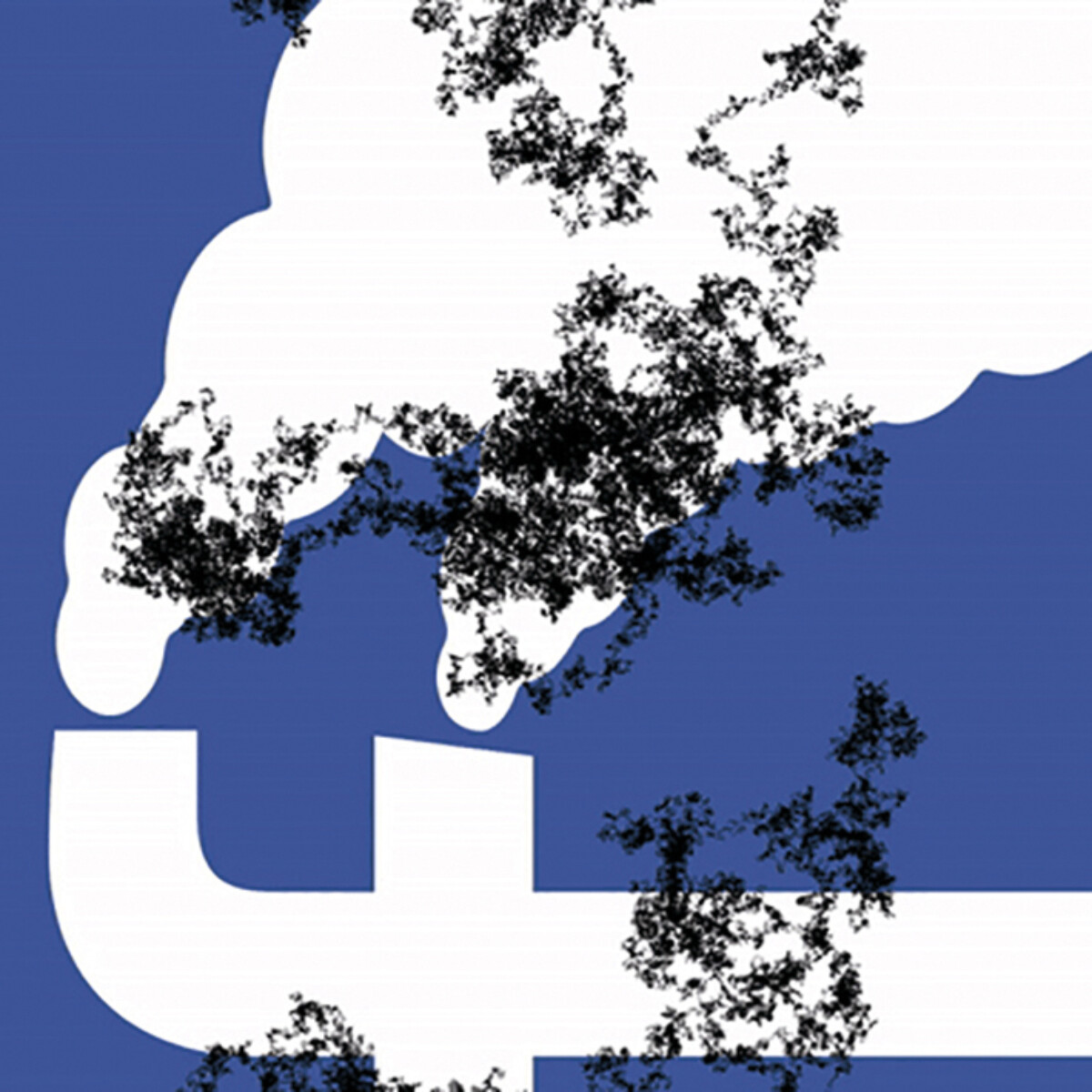

Profiling pollution is an extension that randomly scrolls through and likes posts in your Facebook feed, in an attempt to confuse profiling algorithms.

Antipedia scans Wikipedia for content that was added and then removed in a subsequent revision. It is an interactive website that informs users by telling them what something is not.

The book “An essay on why language is deprecated” contains 474 pages of text generated by GPT-2, a language model. In it, an artificially intelligent algorithm reflects on the use of language.

Text: Régis Dragonetti.